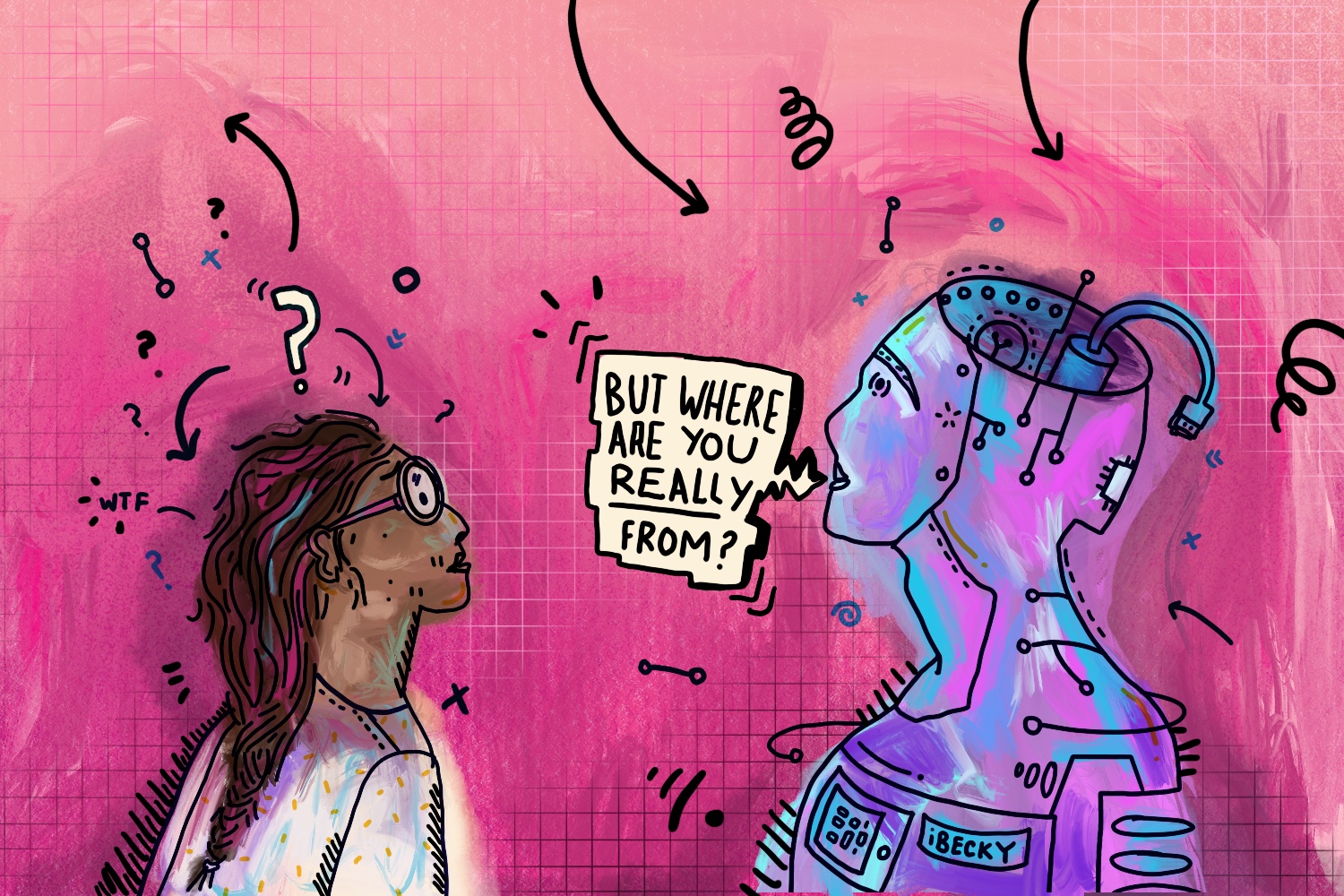

Illustration by Soofiya.com

I have this fear that artificial intelligence is going to kill me and my friends. We’ve all heard the doomsday theories – like killer robots plotting revenge and taking over the world – but that’s not what I’m afraid of. What scares me is that intelligence designed by humans seems to be exactly that: human.

The tech industry is notorious for being predominantly white and male, so it’s no surprise that unconscious biases held there have become embedded in and reflected by the products that are built. Despite growing awareness of the inequality and lack of diversity in tech, direct action to address race and gender gaps has been too slow to keep pace with developments in artificial intelligence (AI) or its use in our daily lives. We’re beginning to see the damaging consequences of the widespread use of machines intended to serve humanity that in reality are only representative of a tiny and homogeneous group.

Using AI in facial recognition has worldwide application in security, surveillance and crime prevention, yet researcher Joy Buolamwini showed that facial recognition software from IBM, Microsoft, and Face++ contains bias against women and people of colour. The software recognises male faces far more accurately than female ones, especially when these faces are white – error rates were over 19% when recognising people with darker skin. Unsurprisingly, the systems performed especially badly at the intersection of race and gender, evidenced by a whopping 34.4% error rate when recognising dark-skinned women. What happens if national security becomes dependent on a system that can recognise white males with just 1% error yet failed to recognise Michelle Obama?

The use of AI in surveillance also compounds my fear of homophobic targeting when travelling abroad. Facial recognition software can now essentially identify a person’s sexuality; in one study AI could correctly identify a gay person by their face with up to 81% accuracy. Data-centric attempts to categorise something as fluid as sexuality concern me. The potential threat to the privacy and safety of queer communities should such technology be used in countries with anti-LGBTQI+ laws leaves me questioning the motives for building what is essentially an automated “gaydar”. The impact of such “research” on non-binary and trans communities could be devastating – how does this technology impact their agency and self-identification?

It’s not just my face being failed by biased tech; the neglect of women’s bodies hides in plain sight. It doesn’t take more than a quick Google search to find hundreds of anecdotal examples of voice recognition software only responding to men’s voices, whether that’s in our cars or on our phones. When Apple first launched their “universal” health app they didn’t think to include a period tracker. According to the NSVRC, one in five women in the US will be raped at some point in their lives, compared to one in 71 men. Yet Apple users there found that Siri replied, “I don’t know what you mean by ‘I was raped’”, while it was able to provide assistance in a vast range of other emergency situations.

Scarier still, should I happen to find myself in intensive care as a result of the tech in my life failing me, guess which technology gets a say in whether or not I am given medical attention? A study released last month shows new AI models can use machine learning to predict the likelihood a patient will die in hospital and can help doctors identify and prioritise patients. You did read that correctly – algorithms biased against people of colour could be used to help doctors decide who would most benefit from immediate intervention in a medical emergency. AI can also predict the risk of premature death and can be used to help families and healthcare professionals make difficult decisions regarding end of life care and withdrawing life support. I’m not being hyperbolic when I say AI may literally kill me.

I’m not sure what the equivalent of unconscious bias training is for a computer system but it might look something like the ‘What-If’ tool Google released to identify the exact moment that bias kicks in during machine-based decision making. I can’t help but think identifying when biases occur misses the point. It feels ironic that we’re now faced with a need to build de-biasing technology into our machines when what we should be focussing on is eliminating the homogeneity that allowed for this to arise in the first place. Call me paranoid, but I also believe we should be developing tools that mitigate the potential for harm that inevitably goes hand-in-hand with the existence of technology that can be used as an automated profiling service.

Talking about the lack of diversity in tech has seemingly become mainstream, but not enough is being done to stop our machines from perpetuating cycles of disadvantage and discrimination in the meantime. With the proliferating use of machine learning to inform decision making in almost every aspect of society, AI’s “white guy problem” is starting to feel more like a problem for people like us.