Facial recognition can’t tell black and brown people apart – but the police are using it anyway

Tech experts say facial recognition has severe race and gender biases. But from February 2020, the London Met are rolling it out across the UK capital. Why?

Moya Lothian McLean

27 Jan 2020

Credit: Canva/Kemi Alemoru

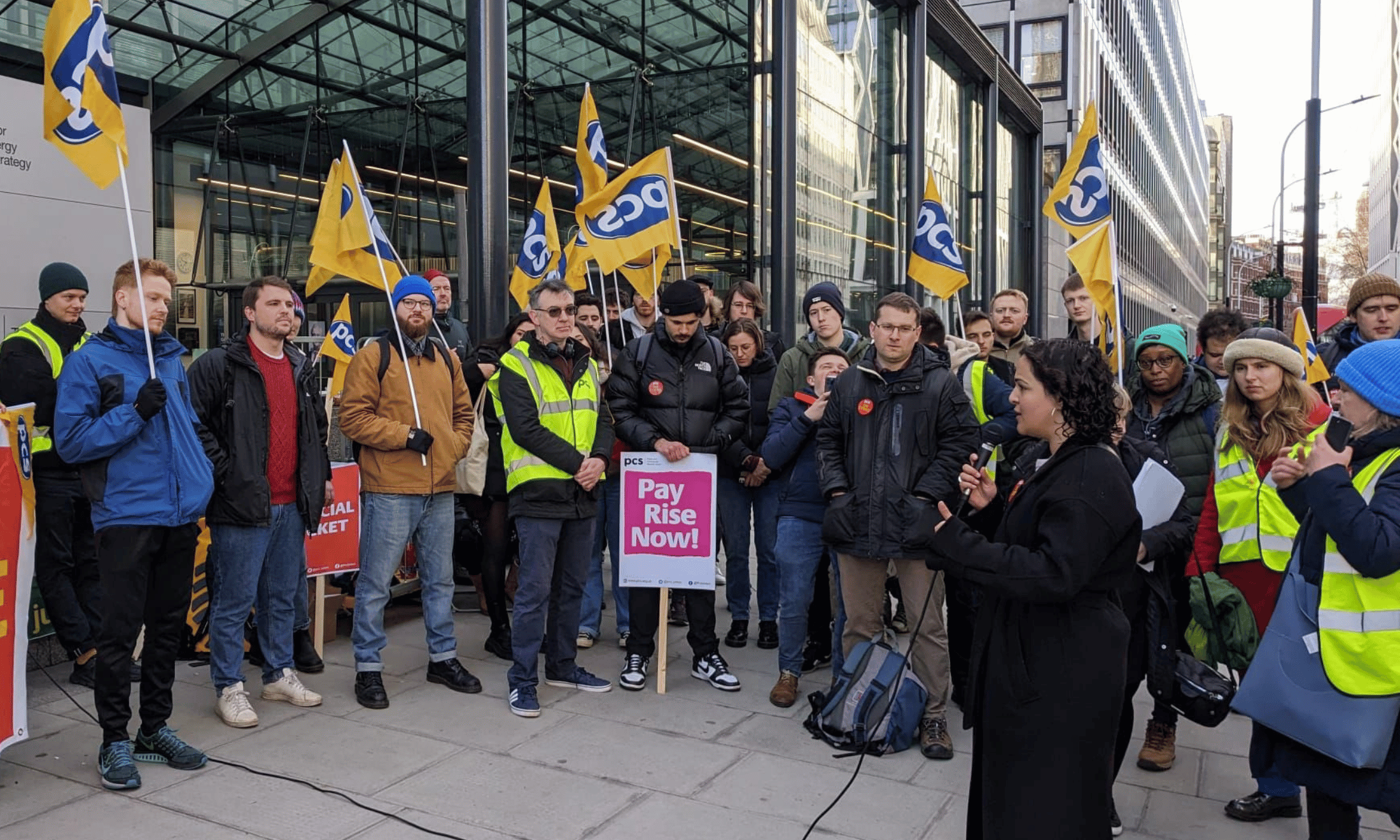

On Friday, London’s Metropolitan Police Force announced in a smug tweet that they would be rolling out live facial recognition technology across the city, starting from February.

Immediately, words like “Orwellian” and “dystopian” were being bandied around – with good reason. The technology – which works (in theory) by allowing faces captured on CCTV to be matched in real-time to image databases and watch-lists – is a huge invasion of privacy. The potential for abuse is so great that days before the Met announced they’d decided to implement widespread use of facial recognition in the capital, the European Commission declared the opposite: that they were so worried about the risks facial recognition posed in its current, unregulated state, they were considering supporting a five-year ban on its use in public areas in EU countries.

But there are two factors that need screaming above all others when it comes to the debate surrounding facial recognition.

One: it’s racist.

Two: it doesn’t even work.

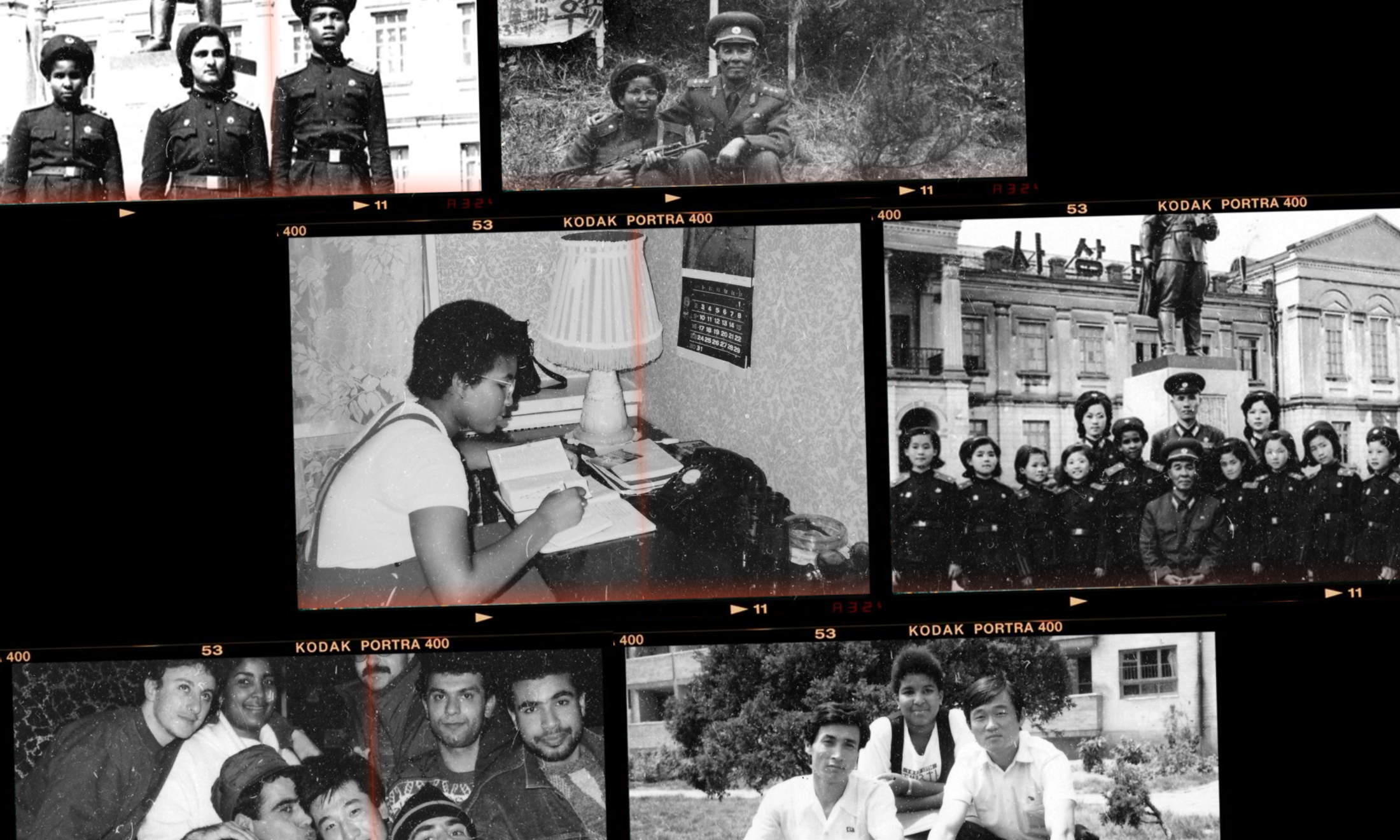

Technology never exists in a vacuum. For now, humans are still responsible for the production of new digital systems, and that means they come into being with all the biases and fallibility of their creators baked right into their code. Facial recognition software is no different. Studies have found it to be hugely flawed when it comes to accurately recognising black and brown individuals, even more so if they happen to be women as well.

“Studies found people from ethnic minorities were 100 times more likely to be misidentified than white individuals by facial recognition software”

In 2018, Massachusetts Institute of Technology graduate student Joy Buolamwini led a series of studies investigating facial recognition systems from the likes of Microsoft, IBM and Amazon. She found them to be endemically racist. Initial research found that the tech only mismatched images of light-skinned men 0.8% of the time. When it came to black and brown individuals though, the rate of inaccuracy shot up. Darker-skinned women had a misidentification rate of 34%.
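To put those two figures side by side, here is a minimal sketch in Python. It uses only the two rates quoted above; the variable names are illustrative assumptions, not anything taken from the studies themselves.

```python
# Error rates quoted in the article, as percentages.
light_skinned_men_error = 0.8       # light-skinned men
darker_skinned_women_error = 34.0   # darker-skinned women

# How many times more often the tech got darker-skinned women wrong.
disparity = darker_skinned_women_error / light_skinned_men_error

print(f"Darker-skinned women were misidentified about {disparity:.1f} times as often")
```

On those numbers alone, the gap is roughly forty-fold.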

Further research by the US National Institute of Standards and Technology in December 2019 examined facial recognition systems provided by 99 tech developers around the world. They found people from ethnic minorities were 100 times more likely to be misidentified than white individuals. Shock.

So how does NeoFace, the facial recognition system currently used by the Met, fare when it comes to correctly identifying ethnic minorities? Well, we don’t know, because the Met have refused to test that aspect of the software on at least four different occasions, using excuses like “limited funds”, despite being aware of facial recognition’s racial blind spot since 2014.

But we are aware of the general (in)accuracy of their software, thanks to FOI requests from campaign groups. It currently stands at an error rate of 81%, according to independent trials. That’s four out of five people wrongly identified by the Met’s facial recognition system who then face being hauled away by officers. And when those individuals are from demographics who are already seen as suspects, you’re looking at a situation that will not only result in tragic miscarriages of justice: it will actively encourage them.
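The jump from “81%” to “four out of five” is simple arithmetic, sketched below in Python. The function name and the alert counts are hypothetical, chosen only so the share comes out at 81%; they are not the figures from the trials.

```python
def wrong_match_share(total_alerts: int, correct_alerts: int) -> float:
    """Share of facial recognition alerts that flagged the wrong person."""
    if total_alerts <= 0:
        raise ValueError("total_alerts must be positive")
    return (total_alerts - correct_alerts) / total_alerts


# Hypothetical counts (an assumption for illustration): 100 alerts, 19 correct.
share = wrong_match_share(total_alerts=100, correct_alerts=19)

# Express the share as "roughly N people in every five flagged".
per_five = round(share * 5)

print(f"{share:.0%} of alerts were wrong, or about {per_five} in every 5 people flagged")
```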

“When existing and discriminatory police processes combine with faulty tech like facial recognition this results in a system where innocent people are flagged up by computers, hauled off the street and then have their biometric data extracted from them”

What everyone must remember is the context in which facial recognition is being implemented. The Met was judged “institutionally racist” in 1999. Twenty years on and its employees are still 86% white, in the most diverse city in the UK. Facial recognition is being employed at a time when police powers that criminalise black and brown people are not being tackled but instead expanded. Like suspicionless stop and search, which even former Home Secretary Theresa May admitted was “unfair, especially to young, black men”, and tried to reform.

But under her successor as prime minister, Boris Johnson, police powers to detain and search people without reason – to which black people are 40 times more likely to be subjected than white people – have been increased, ostensibly to tackle knife crime (on which stop and search has no measurable impact). London Mayor Sadiq Khan also backed increased stop and search proposals in 2018, despite previously promising to roll back stop and search use.

When these discriminatory police powers are combined with faulty tech like facial recognition, the result is a system where innocent people are flagged up by computers, hauled off the street for an intrusive search and then have their biometric data extracted from them. It doesn’t matter if detainees protest, because pre-existing prejudice means police simply don’t believe them.

We don’t need to stretch the imagination to envisage what that future would look like either. It’s already here. The Met have been trialling facial recognition since 2016, including deployments at Notting Hill Carnival and Romford. Civil liberties organisation Big Brother Watch were present during a Romford trial in 2018 and not only discovered the technology had a 100% inaccuracy rate, but witnessed the Met stop a 14-year-old black schoolboy after facial recognition wrongly matched his image to an individual on one of their watchlists. He was held by four plainclothes police officers, questioned, searched and fingerprinted to check his identity.

It didn’t matter that the police got it wrong; the boy’s data had already been sucked into their discriminatory databases like the (illegal) Gangs Matrix, where innocent people are flagged up as potential offenders simply because they press play on a drill track on YouTube. As a result young black men face penalties like being unable to access benefits, higher education and social housing. Imagine queuing up a 67 song and a year later being blocked from going to university. It’s a violation of the most fundamental rights to privacy and freedom of expression going.

Once someone is in police systems, they’re a suspect for life, leaving them open to further persecution at the hands of the law – even if the only reason they’re there is police error.

This is how criminalisation of black and brown people works and the rollout of flawed facial recognition tech is only going to speed up the process. The police know that facial recognition software isn’t fit for purpose and disproportionately targets communities already under siege from them. So why steam ahead? We must assume it’s because they simply don’t care and judge this a price worth paying.

Opposition to the Met’s citywide use of facial recognition must be loud and furious: sign petitions, write letters to local representatives and exercise the right to opt out of your image being captured (police claim participation is by consent, although they’ve previously fined people who have hidden their faces from the cameras). Educate yourselves too on adjacent police powers, like stop and search, that will be used in conjunction with the technology – and on the rights that anyone who is detained has to object. Read up, resist racist recognition software – and buy a balaclava.

Britain’s policing was built on racism. Abolition is unavoidable

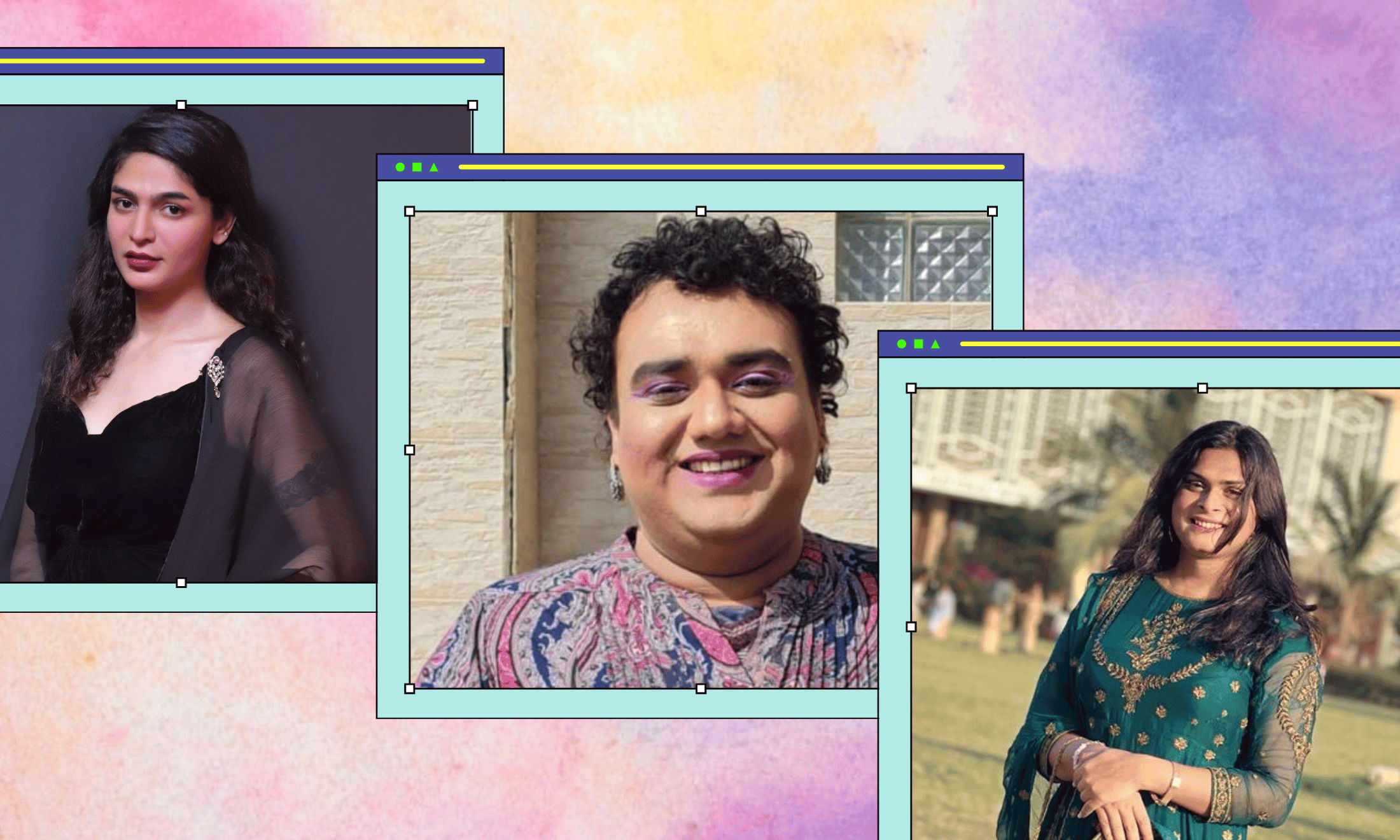

How Pakistan’s Khwaja Sira and transgender communities are fearing and fighting for their futures

Their anti-rape performance went viral globally. Now what?