In 2016, at a pivotal moment in her career, actor Leslie Jones became a victim of online abuse. After the release of the all-female Ghostbusters remake, Jones became a target for racist and misogynistic comments. It wasn’t until Jones began drawing attention to the hate that Twitter finally took action.

On 19th July 2016, Jones tweeted the following and logged out of Twitter:

I feel like I’m in a personal hell. I didn’t do anything to deserve this. It’s just too much. It shouldn’t be like this. So hurt right now.

— Leslie Jones (@Lesdoggg) July 19, 2016

Social media is an integral part of society. It allows people of all ages, races and religions to exercise freedom of speech. Unfortunately, this freedom is abused on a regular basis, and some individuals are more likely to receive online abuse than others. We must ask real questions about whether social media platforms are willing to step up and deal with online abuse properly, and whether victims are really protected.

Jones was inundated with racist comments and derogatory slurs. The harassment campaign was led by alt-right writer Milo Yiannopoulos (whose newly released book sold an embarrassing 152 copies in the UK), who claimed that Jones was “a man” and even shared fake tweets that he claimed she had written. This encouraged Yiannopoulos’s more than 380,000 followers to subject Jones to similar torment.

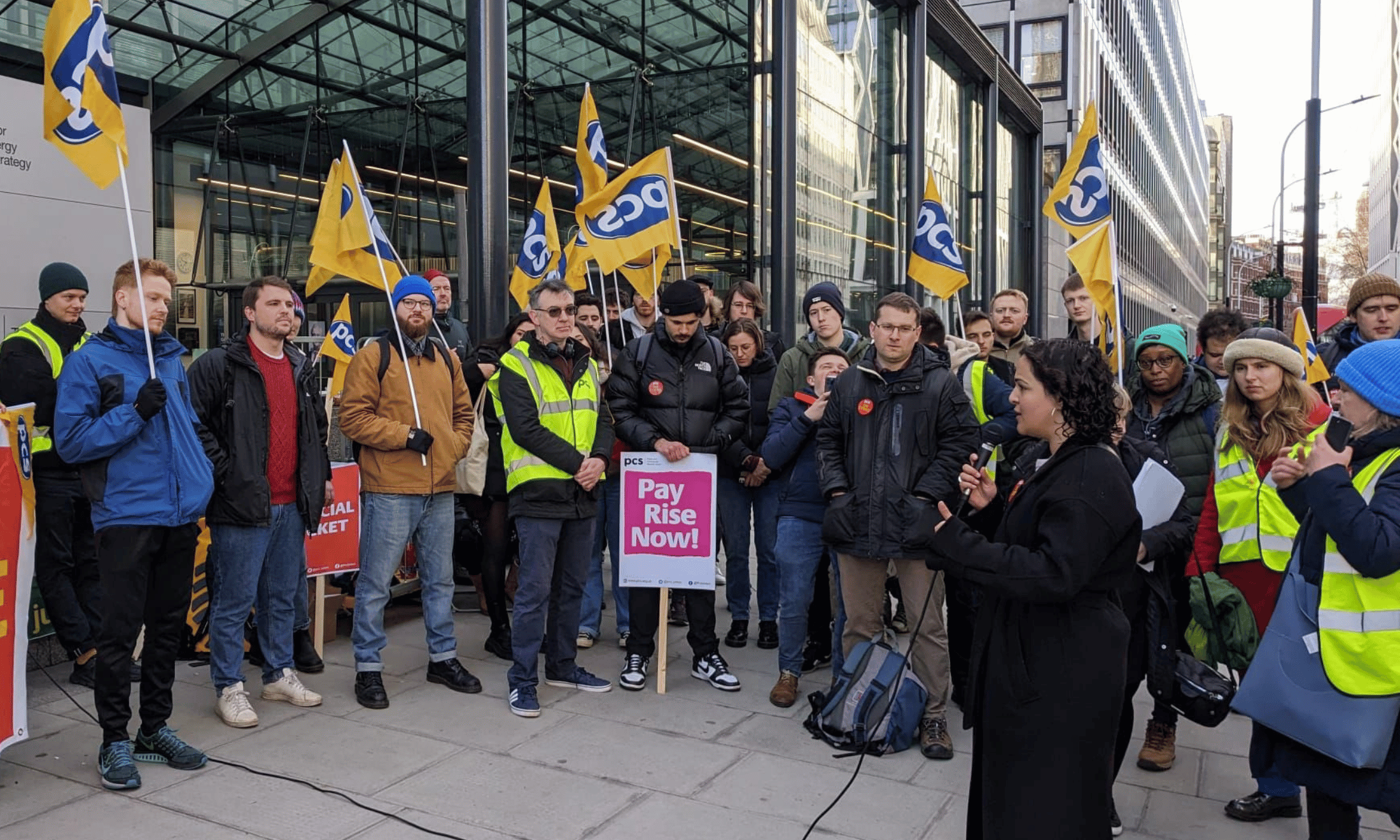

Yiannopoulos is well known for his bigoted vitriol against Black Lives Matter and the feminist movement. Despite this, the abuse Jones experienced was not taken seriously by Twitter, and she resorted to retweeting her own abuse and asking her followers for help in reporting it. It took Twitter two days to finally ban Yiannopoulos from the platform indefinitely, and to delete numerous other troll accounts. The hashtag #LoveForLeslieJ was created to raise awareness of the online abuse Jones was up against.

In response to the Leslie Jones case, Jack Dorsey, the CEO of Twitter, updated the platform and expanded the “mute” feature to help users avoid exposure to harassment and abuse. Twitter also introduced new filters to remove harassing tweets from timelines. Since February 2017, “less relevant replies” to tweets (likely from newly created accounts with little or no following) have been grouped and hidden at the bottom of a tweet’s replies. But it is clear that Twitter needs to step up its game.

A 2014 study by the think tank Demos found that approximately 10,000 tweets per day contained some sort of racial slur. There is a distinction between freedom of speech and hate speech, and it needs to be clarified. All social media platforms, including Twitter, Facebook and Instagram, have community guidelines that supposedly exist to govern users’ behaviour. Yet users still engage in abusive behaviour, and individuals can simply create a new account when a previous one gets deleted. In my personal experience, Twitter and Instagram are both slow to respond to abusive behaviour, especially when the abuse is directed at women of colour.

I have reported accounts engaging in racist behaviour many times, and it takes days, sometimes even weeks, for them to finally be deleted.

More measures need to be taken to identify abusers and prevent serial abusers from creating new accounts. Twitter would do well to stop bragging about its updated safety features and tackle the abuse that women of colour still experience on a daily basis.

The Labour MP Diane Abbott spoke out recently about the constant “mindless” abuse she receives online, which she described as “characteristically racist and sexist”. During a Westminster Hall debate, she talked about the death and rape threats she receives on a daily basis, stating that abusers have described her as a “useless fat black”, “ugly fat black bitch”, and a “n*****”.

Abbott said:

“I’ve had people tweeting that I should be hung if they could find a tree big enough to take the fat bitch’s weight”

If Twitter fails to respond adequately to death threats levelled at the Shadow Home Secretary, what hope is there for the rest of us?

Conversely, social media platforms have a more respectable track record when it comes to supporting white women who experience online abuse. In December 2016, Anna Soubry, a Conservative MP, received a horrific tweet calling for someone to assassinate her; the tweet said that somebody should “Jo Cox her”. Soubry responded to the tweet, and the man behind the abusive account was arrested and charged with sending a message that was grossly offensive or of an indecent, obscene or menacing character.

I spoke with Chawntell, an Indian model, about online abuse on social media. I asked her whether she felt that social media platforms “have our [women of colour’s] backs”, and she pointed out that most platforms have no option to report racism, sexism, homophobia and so on.

Chawntell also explained:

“Living in a society which is ALL of those things we NEED specific ways to report it. Otherwise I feel like the platform itself can easily become a place where all of these things are practised freely”

If you report an account on Instagram, the options to detail why you are reporting include: “posting annoying content”, “spam”, “inappropriate content”, “the profile is pretending to be someone else” and that another user is “posting my intellectual property without authorisation”. There are no options to report someone for being racist or sexist.

Tahira, another woman of colour (WoC), said that within the WoC community “there’s more support for each other especially when encountering abuse”.

I agree with both Tahira and Chawntell. There seems to be more support coming from our community than from social media platforms, which is good, but it is the responsibility of social media giants to protect us from online abuse and to support us when we experience it. These huge, transnational corporations absolutely have the funds and the resources, but there is a distinct and notable lack of will and action.

Evidently, social media platforms still have a long way to go to prevent online abuse of women of colour. Freedom of speech and hate speech need to be clearly defined in community guidelines, because at the moment these platforms are allowing online abuse to flourish. Whether this means creating more robust systems to detect abusive posts and comments or hiring more people to review posts, steps urgently need to be taken.

Inaction on online abuse sends a clear message to women of colour that social media platforms do not have our back. Until real changes are enacted, we will continue to be the casualties of social media giants’ resistance to industry regulation and more rigorous moderation of their communities.

Britain’s policing was built on racism. Abolition is unavoidable

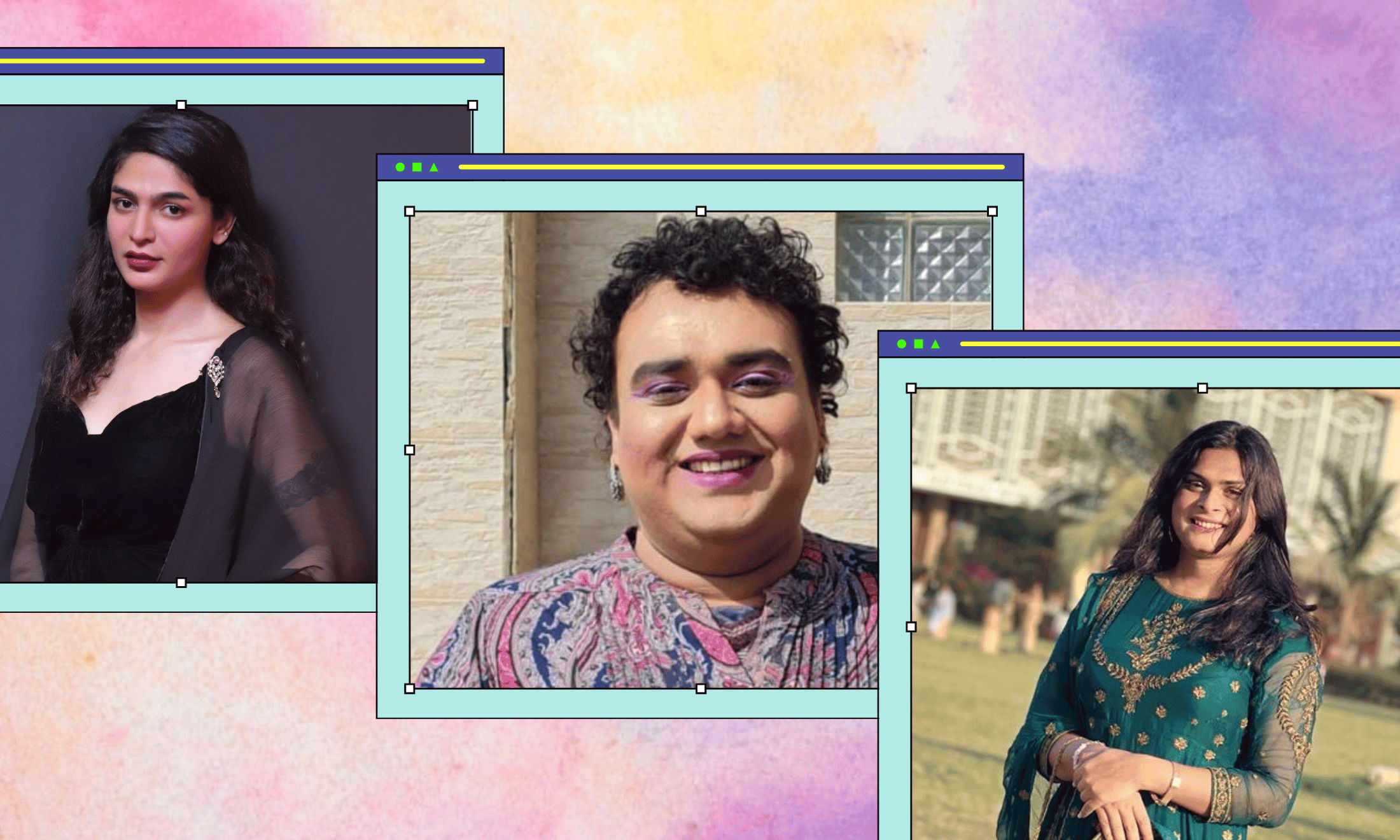

How Pakistan’s Khwaja Sira and transgender communities are fearing and fighting for their futures

Their anti-rape performance went viral globally. Now what?